When people talk about home theater, they immediately picture lavish dedicated rooms, huge screens and sound systems with dozens of speakers. But how did what is now an entire market segment begin? To answer that, we have to go back 50 years.

If the cinephiles of the 1970s could travel in time to the present day, they would probably be astounded by the quality available in today's cinemas, home theaters and media rooms; even a movie watched on a smartphone offers resolution and detail unimaginable in that era. Getting here was not easy. The road to today's technological marvels required a long series of small and large innovations, and above all new ideas, each building on what came before. Together they produced results that in some cases surpass even the cinema experience.

In those days, cinema sound was strictly mono. Dolby® Stereo was introduced in 1975 and had its real breakthrough with the release of Star Wars in 1977. The space allotted to the conventional mono track carried two soundtracks. Through a so-called matrix encoding process, these held not only left and right channel information but also a third channel for the center of the screen and a fourth surround channel for ambient sound and special effects.

At the turn of the 1970s and 1980s, it was not uncommon to see the tag line "70mm and 6-track Dolby Stereo" printed on posters and newspaper advertisements for high- and ultra-high-budget films. At the time, that was the most cutting-edge cinema experience available: pure science fiction.

The Beginnings of Home Theater (1980s)

When Bill Crutchfield founded his eponymous U.S. company in 1974, he focused for a long time simply on reselling car radios. He then gradually added new products to the catalog. In 1981, for example, it still consisted of car audio systems and portable devices. Within a couple of years, however, the consumer electronics landscape changed dramatically with the introduction of VCRs and stereo TVs, and that is when the roots of the home theater phenomenon first became visible.

However, it was not until 1983 that electronics catalogs began to offer products similar in concept to today's equipment. That year Crutchfield, a pioneer at the time, launched the "Pleasure Center," a name that sounds somewhat unfortunate today. But the idea was great. It included "a 19-inch color, high-resolution TV, a stereo VCR, a stereo video disc player, a stereo AM/FM receiver, and a cassette player," all available separately or as an integrated module optimized to work together, at a cost of $3,400, or about $7,000 today. It was practically the forerunner of modern home theater systems.

Of course, today the same amount of money buys far more, and far better. The options include LCD or OLED TVs larger than 100″, screens with 4K and 8K resolution and 4K digital projectors. There are also AV receivers capable of driving dozens of speakers and subwoofers simultaneously, with volumetric and spatial effects.

These technologies rival, and in many cases exceed (room size aside), what most cinemas offer. But in the 1980s it was a different story: significant change only came in the late 1990s, with a sudden leap forward.

Evolution of Audio (1980s-90s)

On paper, Dolby® Surround sound had been in cinemas since the mid-1970s, but it took over 10 years to become a viable technology for home theater. The introduction of HiFi VCRs, which covered the full 20-20,000 Hz frequency range, made the big leap possible. Home video could finally move away from mono tracks, which were constrained to a narrow 70-10,000 Hz.

In 1987, to be clear, A/V receivers with built-in Dolby Surround support did exist, but they could be counted on the fingers of one hand. Separate Dolby processors were also available that could be added to existing setups to create surround sound, but these were rare, expensive, pioneering niche products.

At that time, Dolby Surround had only 4 channels: left and right fronts, a center channel for dialogue and, finally, a surround channel split between a pair of dedicated speakers. It worked and made its point, but it felt like a half-measure: ambitious, yet held back by its limits.

That same year, Dolby launched Pro Logic. Rather than being a completely different system, it introduced an improved method for decoding Dolby Surround tracks. Using so-called steering logic, it continuously raised or lowered the level of each channel independently. This gave more precise spatial placement of dialogue, music and sound effects.

The Dolby Digital Revolution (1990s)

Despite a brand name that evokes superior technologies, Dolby Surround and Dolby Pro Logic were based on analog audio; the idea was to squeeze four sound channels into two, then unpack them upon playback through the audio processor.
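The "four into two" idea can be sketched with simple sums and differences. The snippet below is a minimal, real-valued illustration, not Dolby's actual implementation. Real encoders also apply a ±90-degree phase shift and band-limiting to the surround channel, and Pro Logic adds adaptive steering on top. The names `G`, `encode` and `passive_decode` are hypothetical.

```python
import math

# Simplified 4:2:4 matrix sketch in the spirit of Dolby Stereo / Dolby Surround.
# Assumption: the real 90-degree phase shift on the surround channel is omitted,
# so everything here is a plain per-sample sum or difference.
G = 1 / math.sqrt(2)  # -3 dB gain applied to center and surround

def encode(l, r, c, s):
    """Fold four channels (L, R, C, S) into two transmitted channels (Lt, Rt)."""
    lt = l + G * c + G * s
    rt = r + G * c - G * s
    return lt, rt

def passive_decode(lt, rt):
    """Unfold two channels into four outputs using sums and differences only."""
    left = lt
    right = rt
    center = G * (lt + rt)    # in-phase content lands in the center
    surround = G * (lt - rt)  # out-of-phase content lands in the surround
    return left, right, center, surround

# A signal placed only in the center comes back at full level in the center,
# but also leaks into left and right at about 0.707 (-3 dB).
print(passive_decode(*encode(0.0, 0.0, 1.0, 0.0)))
```

The leakage is the weak point of a passive matrix. A center-only signal also reappears at about -3 dB in the left and right outputs, and a surround-only signal leaks into the fronts with opposite polarity. Reducing that crosstalk by dynamically adjusting channel levels is the job of the steering logic described above for Pro Logic.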

The transition to digital came only with Dolby Digital, officially launched in 1991. This format uses digital audio to create surround sound on six discrete channels: front right, front left, center, independent left and right surrounds, plus an LFE (low-frequency effects) channel to be routed to a subwoofer.

The channel separation, the much better signal-to-noise ratio and the extremely precise placement of sound effects made a dramatic difference to overall sound quality.

The first film to use it was Batman Returns, in 1992. Dolby Digital tracks had technically already reached home theaters via LaserDisc, the first optical video disc format, introduced in 1978. Even so, the format did not really take hold until the launch of DVD in 1997.

In fact, Dolby Digital became the standard audio format for DVD. The first Dolby Digital-compatible components were stand-alone processors to be connected to existing AV receivers, but receivers with built-in Dolby Digital decoding soon followed.

DVD Revolution

The event that changed everything in home theater came in 1997 with the introduction of DVD. It could deliver a much higher quality video stream (720 x 480 pixels, roughly twice the horizontal detail of VHS) and, above all, excellent 5.1 Dolby® Digital and/or DTS audio.

A single DVD could hold up to 2 hours of video, and that was only the start. The real bonus of optical media was something else: no more rewinding the tape at the end.

It took only two more years to get to DVD-Audio and SACD (Super Audio CD). Most of these discs were technically stereo, especially at first. Even so, they were the first serious attempt since the quadraphonic experiments of the 1970s to bring multichannel surround music into homes.

DVD-Audio died shortly thereafter, but SACD is still available today, albeit to a small community of audiophiles.

HD DVD and Blu-ray

The last evolutionary leap before today's streaming services came in 2006, when two competing high-definition disc standards faced off on the market. On one side was HD DVD, developed by a consortium of hardware and software manufacturers led by Toshiba and supported by studios including Warner Bros. On the other side was Sony's Blu-ray Disc.

Both formats could hold far more data than a traditional DVD, thanks to similar, parallel technologies. Instead of a red laser, they used a blue-violet beam able to read much smaller pits on the disc surface. This change made full HD 1080p possible, along with lossless audio thanks to Dolby® TrueHD and DTS-HD Master Audio.
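A back-of-the-envelope estimate shows why the shorter wavelength mattered. Assume that the smallest readable spot scales with the laser wavelength λ divided by the lens's numerical aperture (NA), and that storage density scales with the inverse square of that spot. Under those assumptions, the standard optical parameters (DVD: 650 nm, NA 0.60; Blu-ray: 405 nm, NA 0.85; HD DVD: 405 nm, NA 0.65) give:

```latex
\begin{aligned}
d &\propto \frac{\lambda}{\mathrm{NA}}, \qquad \text{density} \propto \frac{1}{d^{2}} \\[4pt]
\frac{d_{\text{DVD}}}{d_{\text{BD}}} &= \frac{650/0.60}{405/0.85} \approx \frac{1083}{476.5} \approx 2.27
  \;\Rightarrow\; 2.27^{2} \approx 5.2 \\[4pt]
\frac{d_{\text{DVD}}}{d_{\text{HD DVD}}} &= \frac{650/0.60}{405/0.65} \approx \frac{1083}{623} \approx 1.74
  \;\Rightarrow\; 1.74^{2} \approx 3.0
\end{aligned}
```

These ratios land close to the actual single-layer capacities (4.7 GB for DVD, 15 GB for HD DVD, 25 GB for Blu-ray). The remainder comes from differences in track pitch, modulation and error correction, which this simple model ignores.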

To this day, only Blu-ray is still with us; HD DVD was officially discontinued in 2008.

However, the new generation of players introduced a problem: how to carry such a large data stream to the TV or receiver without losing quality. Analog cables simply were not up to the task. That is why HDMI was developed, and it is still in use today in its latest versions.

Introduced in 2002, this all-digital connection could carry both the audio and video signals of optical discs over a single cable, with built-in copy protection. The latest version, 2.1, is designed to handle 8K.
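A rough calculation gives a feel for how large that stream is. The disc stores compressed video, but the player decodes it and sends the picture uncompressed over HDMI. The sketch below uses a hypothetical helper, `raw_video_bitrate`, to estimate only the active-picture bit rate. It assumes 8 bits per color component and ignores blanking intervals, audio and link-level encoding overhead, so it is not HDMI's actual link math.

```python
def raw_video_bitrate(width, height, fps, bits_per_pixel=24):
    """Uncompressed bit rate of the active picture, in bits per second.

    Assumption: 8 bits per RGB component (24 bits per pixel); blanking,
    audio and link encoding overhead are deliberately ignored.
    """
    return width * height * bits_per_pixel * fps

# Film content on Blu-ray is typically 1080p at 24 frames per second;
# 1080p60 is shown for comparison.
for label, w, h, fps in [("1080p24", 1920, 1080, 24), ("1080p60", 1920, 1080, 60)]:
    print(f"{label}: {raw_video_bitrate(w, h, fps) / 1e9:.2f} Gbit/s")
```

The result is roughly 1.2 Gbit/s for 1080p24 and 3 Gbit/s for 1080p60, before any overhead. That is the order of magnitude a single digital cable had to carry cleanly.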

The Evolution of HD TVs & Projectors

In general, TVs and projectors were slower to keep pace with high-definition digital entertainment. Even so, the past 20 years have brought a major transformation in the home as well.

Until the late 1990s, televisions were all based on CRT technology, that is, the old cathode ray tube. CRTs could produce good high-definition images for the time, but the technology needed for larger screens was expensive. In any case, screen sizes were much smaller than what we consider normal today. CRT sets were also large, heavy and required frequent maintenance.

In 2000, very thin flat-screen TVs based on plasma or LCD technology began to appear on the market. At least at first, they were sold side by side with the old CRTs, and in any large electronics store you could find all of these at the same time:

- CRT TVs: Very low prices, but a resolution of only about 330 lines.

- EDTVs: Aggressive pricing and 480p progressive-scan resolution, a modest step up from standard-definition 480i.

- HD-Ready TVs: Pricier than EDTVs but cheaper than full HDTVs. They supported 720p and/or 1080i (often not as well as expected), but had no built-in HD tuner.

- HDTVs: Expensive, but they handled 720p and 1080i perfectly and included a built-in HD tuner for receiving high-definition broadcasts.

Flat TVs of those years typically had 720p or 1080i resolution and a built-in tuner for over-the-air HD broadcasts. The transition from the outdated 4:3 format to 16:9 widescreen was underway, and for a few years the two had to coexist. Even on HDTVs costing thousands of euros, the most common connection remained analog. A few manufacturers added DVI, also used on computer monitors, but it was short-lived: HDMI was just around the corner.

Rear-projection TVs competed with plasma and LCD models for the title of best picture quality, but they were much bulkier. They soon gave way to thinner, more attractive competing technologies.

At this stage, the yardstick for measuring a TV's performance was full HD 1080p, and that is when projectors started becoming increasingly popular. With the move to digital, they became cheaper and, above all, easier to operate and maintain.

To get home viewing that closely resembles the cinema, however, we had to wait for HDTV and the complete switch-off of analog terrestrial television, which in Italy was completed on July 4, 2012. That transition, together with the spread of high-definition Blu-ray discs, set off the chain of events that led to home theater as we know it.

The 4K Era

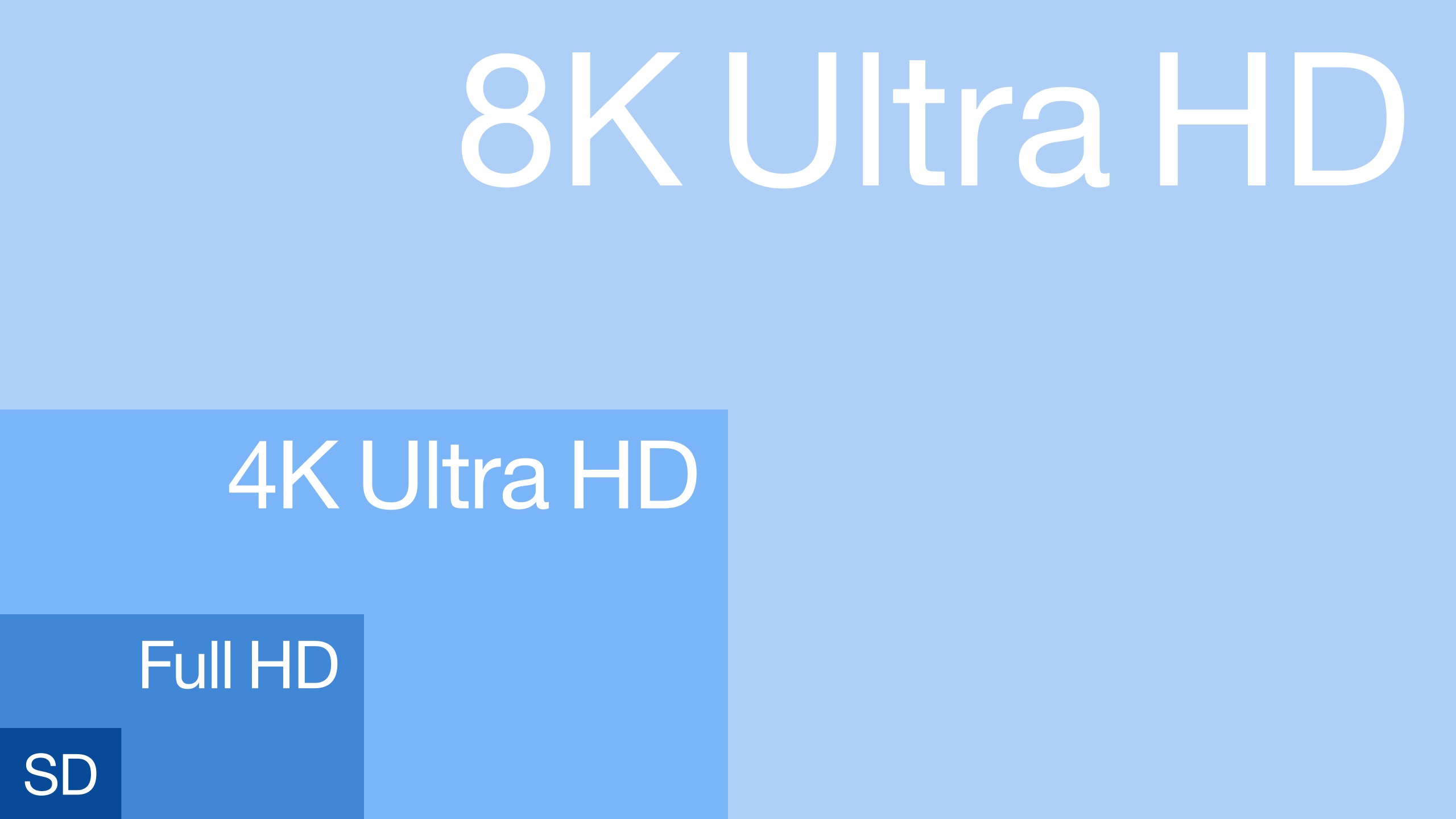

The first consumer 4K Ultra HD TVs arrived between 2012 and 2013, and they were a revolution. With 4 times the resolution of Full HD, they could show more than 8 million pixels versus about 2 million for 1080p.
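The pixel counts follow directly from the frame dimensions. The small sketch below assumes the consumer UHD raster of 3840×2160, not the 4096-pixel-wide DCI cinema format. The `RESOLUTIONS` table and `pixels` helper are illustrative names, not part of any standard API.

```python
# Frame dimensions (width, height); 4K UHD here is the consumer 3840x2160 raster.
RESOLUTIONS = {
    "DVD (NTSC)": (720, 480),
    "Full HD": (1920, 1080),
    "4K UHD": (3840, 2160),
}

def pixels(name):
    """Total number of pixels in one frame of the named format."""
    width, height = RESOLUTIONS[name]
    return width * height

full_hd = pixels("Full HD")
for name in RESOLUTIONS:
    print(f"{name}: {pixels(name):,} pixels, {pixels(name) / full_hd:.2f}x Full HD")
```

This prints 8,294,400 pixels for 4K UHD against 2,073,600 for Full HD, exactly four times as many, because both width and height double.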

Initially, needless to say, prices were stratospheric and 4K content was virtually nonexistent, apart from spectacular manufacturer demos. Fortunately, the gap was filled by Internet streaming services such as YouTube, Netflix and Amazon Prime Video.

During the same period, projectors also jumped on the 4K bandwagon, though not cheaply. For this reason, alongside models with native 4K resolution, many others appeared that achieved image detail very close to 4K starting from 1080p imaging chips. One of the techniques used was so-called "pixel shifting."

Later, prices of 4K TVs plummeted, making them an affordable luxury for almost anyone; 4K projectors, on the other hand, remained considerably more expensive.

The OLED Miracle

Early 4K TVs were mostly based on LCD technology, but an alternative, competing technology called OLED had been on the market since 2008. Without getting too technical, the key difference is that LCD costs less but needs a backlight, which adds thickness to the TV and produces washed-out blacks. OLED, on the other hand, lights each pixel on its own and leaves unneeded ones completely off, which means:

- Doesn’t need backlighting (so TVs are thinner)

- Produces convincing blacks

- Consumes less power (unless very bright full-screen images are displayed; in that case, LCD wins)

Also in 2013, Samsung and LG launched the first 55-inch OLED HDTVs, and that was when the first curved screens appeared, costing 10,000 to 20,000 euros or more. In 2015 it was the turn of 4K OLED TVs, and the jump in quality was immediately obvious to everyone.

A further improvement came with HDR (High Dynamic Range) in 2016, now available on online streaming services and 4K Ultra HD Blu-ray players. When the TV supports it and the video is in 4K HDR, contrast and color can surpass what most cinemas offer. Only a small number of premium theaters, such as Dolby Cinema venues, are equipped for HDR projection.

Dolby Atmos

While video was making great strides, audio was not standing still: Dolby Atmos reached home theaters in 2014, followed by DTS:X the next year.

This technology ties sounds to individual objects in the scene and can move them 360 degrees around the listener. That makes it possible to simulate the roar of a helicopter as it lifts off the ground, or a crack of thunder traveling from the upper left corner of the room to the lower right corner of the floor.

To achieve this, of course, you need speakers placed above the listening position as well as around it. You also need compatible content, which can be found on Netflix and Amazon Prime Video as well as on Blu-ray.
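The core idea of object-based audio is that a sound is stored together with its position, and the playback system works out how loud each speaker should be. Dolby's actual renderer is proprietary and works in three dimensions across many speakers. The sketch below shows only the basic principle with a generic constant-power pan law between two speakers. It is not the Atmos algorithm, and `constant_power_pan` and its 0-to-1 position convention are hypothetical.

```python
import math

def constant_power_pan(position):
    """Gains for a left/right speaker pair.

    position: 0.0 = fully left, 1.0 = fully right (hypothetical convention).
    Returns (g_left, g_right) with g_left**2 + g_right**2 == 1, so the total
    acoustic power stays constant as the object moves between the speakers.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0 and 1")
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)

# Move a hypothetical sound object from left to right in five steps.
for step in range(5):
    p = step / 4
    g_left, g_right = constant_power_pan(p)
    print(f"position {p:.2f}: left {g_left:.3f}, right {g_right:.3f}")
```

Using cosine and sine instead of a straight linear crossfade avoids the audible dip in loudness that a linear fade produces when the object sits halfway between the two speakers.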

Home Theater: The Future Is 8K

The first 8K TVs, also known as 8K Ultra HD, appeared in 2018. They can display content at a remarkable resolution of 7,680×4,320 pixels, about 33 megapixels in total. This means a single 8K frame contains 16 times as many pixels as a full HD frame.
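The 16× figure follows directly from the two frame sizes: each dimension is four times larger, and the two factors multiply.

```latex
\begin{aligned}
7680 \times 4320 &= 33\,177\,600 \approx 33\ \text{megapixels} \\
1920 \times 1080 &= 2\,073\,600 \\
\frac{7680 \times 4320}{1920 \times 1080} &= \frac{7680}{1920} \times \frac{4320}{1080} = 4 \times 4 = 16
\end{aligned}
```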

For the time being, these TVs remain expensive and 8K content is nearly unobtainable. But if you think about it, this is the same script that has played out at every evolutionary step. So it is a safe bet that refined production processes and falling consumer prices will spread this technology further in the coming years. That, in turn, will convince content producers to churn out native 8K video. And the cycle will repeat once more, only next time it will be 16K's turn, who knows when.

To send high-resolution video and multichannel audio streams to TVs, projectors, optical players, set-top boxes and so on, the go-to connection is still the HDMI cable. The all-digital HDMI standard was introduced in 2002 to carry picture and sound from DVD, and its features have since been updated to meet the needs of new audio and video formats. The latest version, HDMI 2.1, is designed from the ground up for 8K.